My main research stems from an interest in spatial and temporal modelling in computer vision. This is applied in many domains - I'm an interdisciplinary researcher who's interested in all sorts of application areas. A broad indication of the kinds of research domains, and the questions relevant to each, is given below. If you'd like to see some of my outputs, the publications page has links to peer-reviewed journal and conference papers.

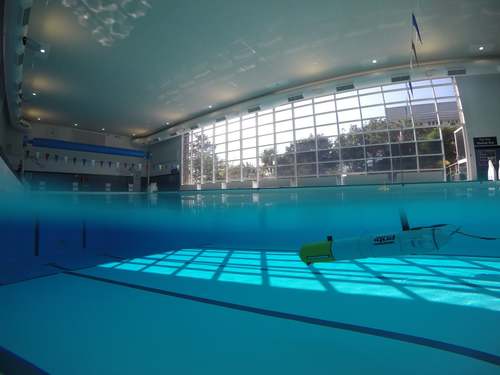

Stop press! (September 2021) I've got a robotic submarine. It's a SPARUS II from IQUA Robotics, a spinout company based near the University of Girona. We have just had training in the use of this autonomous underwater vehicle and it's great. Here's a pic of it in the university swimming pool:

Questions... How can we model change in plants? How can we measure development (growth, spatial organisation)? What kinds of imaging platforms are appropriate for this? Are there low-cost solutions to the phenotyping problem? (aka "how far can we get with a £50 webcam?") How can we register images in different modalities (infra-red, spectroscopy, visible spectrum) when objects grow? How can we deal with occlusion?

On this research strand I'm collaborating heavily with the UK's National Plant Phenomics Centre (in Aberystwyth). I had an EPSRC First Grant looking at 2.5D modelling of plant structure (employing Jon Bell as an RA), and my recently completed PhD student Shishen Wang looked in particular at imaging of Arabidopsis plants. I am also a member of the new "Technology Touching Life" network project PhenomUK.

Questions... How do we navigate through space? How do we perceive space? How does geography constrain the way we move around? What cues do we use when navigating? How can we incorporate this into artificial systems (either static video analysis systems, or active perceivers like robots)? How can we deal with occlusions and shadows?

On this research strand I'm working with robots, including Idris (a 400kg autonomous wheeled vehicle), and a robotic boat platform with an aerial blimp-mounted camera. There have been two PhD students in this domain - I was first supervisor for Max Walker (robot boats, Raspberry Pis, and blimps!), and second supervisor for Juan Cao. I've also got an ongoing project looking at spatial reasoning and shadows, working with Paulo Santos at FEI in São Bernardo do Campo, Brazil; this was supported by a British Council Research Exchange in 2006 and continues to this day.

Questions... How can we model change over time? How can we register images in different modalities? Can we use groupwise methods? How do we deal with 2D and 3D datasets at the same time?

Applications in this area have been in prostate cancer research (I was second supervisor for Jonathan Roscoe, looking at 2D/3D imaging for prostates) and in skin quality assessment (I was second supervisor for Alasanne Seck, who used lightstage imaging to determine skin quality from high-definition 3D images). We are trying to build a lightstage in Aberystwyth.

Questions... Can we work out how an artist's style changes over time? Can we locate a painting in geographical space? What can we tell about location and style from a photograph of an artwork?

This work was in collaboration with people at the National Library of Wales, namely Lloyd Roderick, a PhD student who took a digital humanities approach to the landscape painter Sir John "Kyffin" Williams. This work also involved Lorna Hughes, who is now at Glasgow.

I'm also interested in general computer vision problems and applications, particularly ones which involve the analysis of difficult video (low frame-rate, low resolution, underwater, too close to the object, multiple occlusions...), such as data from webcams, camera phones and so on. If you're interested in collaborating on this sort of stuff, get in touch on hmd1@aber.ac.uk.